What’s the difference between machine vision and computer vision?

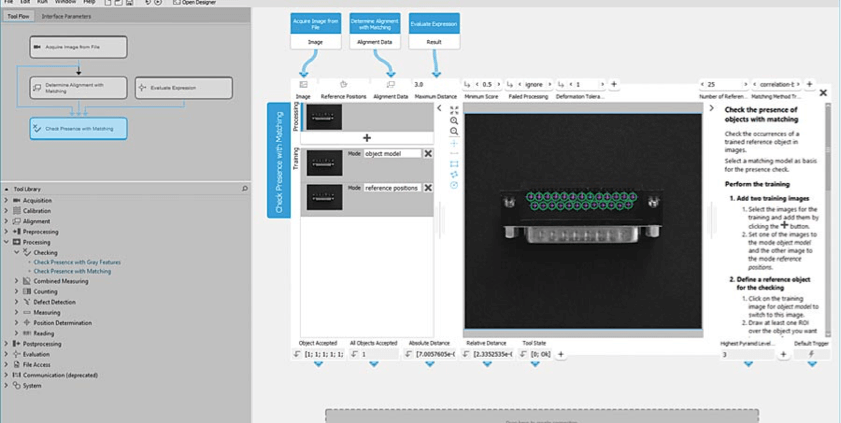

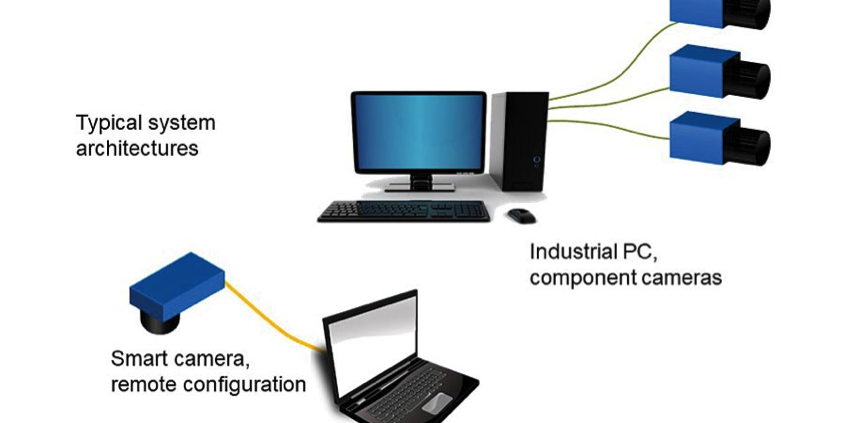

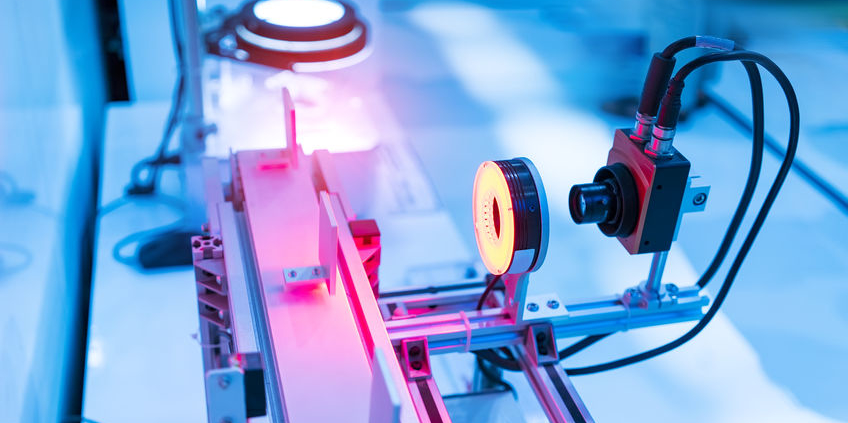

Computer vision and machine vision are overlapping technologies. A machine vision system is typically part of a larger machine and needs a computer and specific software to operate, whereas computer vision does not need to be integrated with a machine. Computer vision is also not limited to photos or videos: it can, for example, analyze digital images or videos found online as well as “images” from motion detectors, infrared sensors, or other sources. In this sense, machine vision is a sub-category of computer vision.